Building a Discord Bot with TeapotLLM

Introduction

TeapotLLM is an open-source, hallucination-resistant language model optimized to run entirely on CPUs, making it ideal for building low-cost chatbot applications.

In this post, we’ll walk through building a Discord bot using TeapotLLM to answer frequently asked questions from a knowledge source. We’ll integrate retrieval-augmented generation (RAG) for document-based responses, utilize Brave Search for real-time context, and explore how to monitor performance using LangSmith.

Come join our Discord to build along with us!

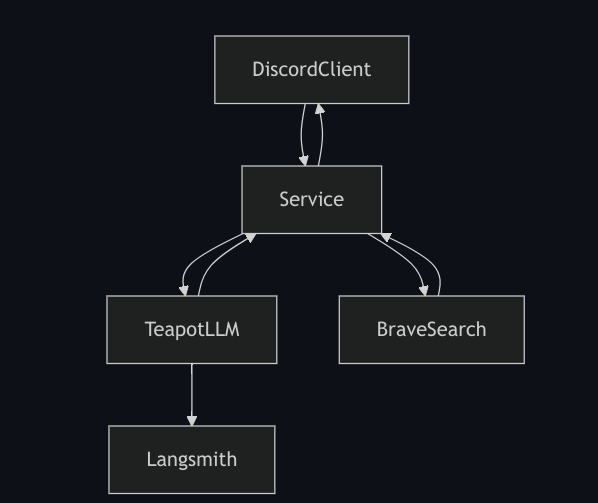

High-Level Architecture

Our bot will follow a simple workflow:

- A user asks a question in Discord.

- The bot checks its stored TeapotLLM documentation for relevant answers (RAG).

- If needed, it queries Brave Search for additional context.

- The response is generated using TeapotLLM and sent back to Discord.

Architecture Diagram:

Setting Up a Discord Bot

To get started, install the necessary dependencies:

pip install discord.py teapotai

Basic Discord Bot Code

First, let’s write a simple Python script that creates a Discord bot that replies “Hello World!” whenever it is mentioned. The discord.py library makes it easy to set up a bot that listens for messages and replies with a typing animation. We also make sure the bot does not reply to its own messages, which would cause an infinite loop. You’ll need a bot token and a bot application installed on your server to get this running; follow the documentation here to set that up.

import discord

intents = discord.Intents.default()
intents.messages = True
client = discord.Client(intents=intents)

@client.event
async def on_ready():
    print(f'Logged in as {client.user}')

@client.event
async def on_message(message):
    # Ignore the bot's own messages to avoid an infinite loop
    if message.author == client.user:
        return
    # Only respond when the bot is mentioned
    if f'<@{client.user.id}>' not in message.content:
        return
    # Show a typing indicator while the response is prepared
    async with message.channel.typing():
        response = "Hello World!"
        sent_message = await message.reply(response)

client.run("YOUR_DISCORD_BOT_TOKEN")
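Hardcoding the token is fine for a first run, but a token committed to a repository can be used by anyone who finds it. Here is a minimal sketch of reading it from the environment instead, assuming it is stored in a variable named DISCORD_BOT_TOKEN. Both that name and the load_bot_token helper are chosen here for illustration; discord.py does not require either.

```python
import os

def load_bot_token(env_var="DISCORD_BOT_TOKEN"):
    """Read the bot token from the environment (the variable name is an assumption)."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable to your bot token")
    return token
```

With this in place, the last line of the script could become client.run(load_bot_token()).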

Integrating TeapotLLM for FAQs

Now that we have our Discord bot set up, we can plug in the teapotai library to provide intelligent responses using our knowledge base. TeapotLLM is a small model that can run on your CPU and has been trained to only answer using the provided documents to reduce hallucinations. The teapotai library automatically manages creating a local vector database for your documents and a RAG pipeline that can be used to provide the LLM with knowledge to answer user queries.

Loading TeapotLLM with FAQ Documents

from teapotai import TeapotAI, TeapotAISettings

documents = [
    "Teapot is an open-source small language model (~800 million parameters) fine-tuned on synthetic data and optimized to run locally on resource-constrained devices such as smartphones and CPUs.",
    "Teapot is trained to only answer using context from documents, reducing hallucinations.",
    "Teapot can perform a variety of tasks, including hallucination-resistant Question Answering (QnA), Retrieval-Augmented Generation (RAG), and JSON extraction.",
    "The model was trained on a synthetic dataset and optimized for efficient question answering.",
    "TeapotLLM is a fine tune of flan-t5-large that was trained on synthetic data generated by Deepseek v3",
    "TeapotLLM can be hosted on low-power devices with as little as 2GB of CPU RAM such as a Raspberry Pi.",
    "Teapot is a model built by and for the community."
]

teapot_ai = TeapotAI(
    documents=documents,
    settings=TeapotAISettings(
        rag_num_results=3
    )
)

To ensure each document fits into the context, we split our documentation into logical chunks. This can be done automatically by splitting at paragraphs or page breaks, but the right approach will vary depending on the format of your knowledge base. You can experiment with the document setup and examine how it affects the RAG results. We’ve also set rag_num_results to 3 so that the retrieved documents leave enough room in the context for live search results.
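Paragraph-based splitting can be sketched as follows. This is one possible approach rather than anything built into teapotai: the chunk_paragraphs helper is hypothetical, and the 500-character default is an arbitrary illustrative value that you would tune for your own documents.

```python
def chunk_paragraphs(text, max_chars=500):
    """Split text on blank lines and pack paragraphs into chunks of roughly max_chars."""
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for paragraph in paragraphs:
        candidate = f"{current}\n\n{paragraph}" if current else paragraph
        if len(candidate) <= max_chars:
            # The paragraph still fits, so keep growing the current chunk
            current = candidate
        else:
            if current:
                chunks.append(current)
            # An oversized paragraph is kept whole rather than cut mid-sentence
            current = paragraph
    if current:
        chunks.append(current)
    return chunks
```

The returned list of strings has the same shape as the documents list above, so it could be passed to TeapotAI in its place.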

Querying TeapotLLM in the Discord Bot

Now that we’ve set up our knowledge base, we can invoke our teapot_ai instance within our Discord bot code. Here we are using the teapot_ai.query() method to respond to the user query. This method automatically pulls in relevant results from the RAG pipeline that will be used to inform the response.

@client.event
async def on_message(message):
    if message.author == client.user:
        return
    if f'<@{client.user.id}>' not in message.content:
        return
    async with message.channel.typing():
        response = teapot_ai.query(
            query=message.content
        )
        sent_message = await message.reply(response)
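Because the handler only responds to messages that mention the bot, message.content always contains the mention token (something like <@1234>) alongside the actual question. Whether that token affects retrieval will depend on your documents, but it is easy to strip before querying. A small sketch follows; the clean_query helper is a hypothetical name, not part of discord.py or teapotai.

```python
import re

def clean_query(content, bot_id):
    """Remove the bot's mention token from a message and normalize whitespace."""
    # Matches both <@123> and the older nickname form <@!123>
    mention = re.compile(rf"<@!?{bot_id}>")
    # Replace the mention with a space, then collapse runs of whitespace
    return " ".join(mention.sub(" ", content).split())
```

In the handler, this would be called as clean_query(message.content, client.user.id) and the result passed as the query.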

Integrating Brave Search for Additional Context

Our local teapotai instance can now answer from our knowledge base, but what if a user asks a more general question that falls outside the scope of our documents? That is where Brave Search comes in: we run a Brave Search with the user query and add the description of the top result to the model’s context. This lets the model answer questions about current events or other topics not covered by our RAG pipeline. You’ll need a Brave API token; learn more about getting set up on the Brave Search API here.

Fetching Brave Search Results

import os
import requests

def search_brave(query, count=1):
    url = "https://api.search.brave.com/res/v1/web/search"
    headers = {
        "Accept": "application/json",
        # The API key is read from the brave_api_key environment variable
        "X-Subscription-Token": os.environ.get("brave_api_key")
    }
    params = {"q": query, "count": count}
    response = requests.get(url, headers=headers, params=params)
    if response.status_code == 200:
        # Return the description of the top web result, or "" if there are none
        results = response.json().get("web", {}).get("results", [])
        return results[0].get("description", "") if results else ""
    else:
        print(f"Error: {response.status_code}, {response.text}")
        return ""

Enhancing the Bot with Brave Search

We can drop any additional context information in the context parameter of our query method. This will always be included, regardless of what the RAG pipeline returns. Now we have live search results in our model’s context!

@client.event
async def on_message(message):
    if message.author == client.user:
        return
    if f'<@{client.user.id}>' not in message.content:
        return
    async with message.channel.typing():
        response = teapot_ai.query(
            query=message.content,
            context=search_brave(message.content)
        )
        sent_message = await message.reply(response)

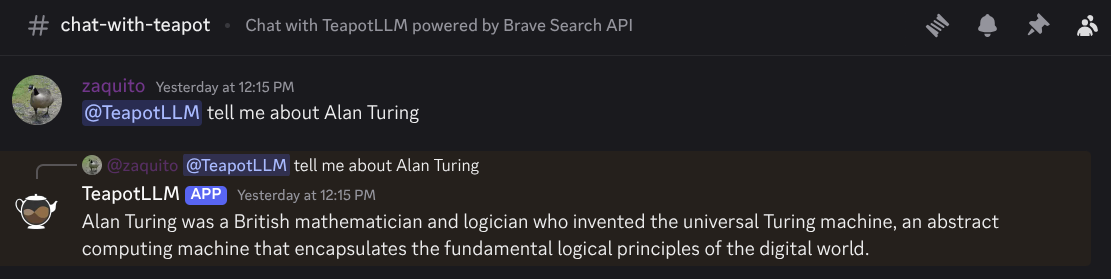
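One thing to keep in mind: teapot_ai.query and the requests call inside search_brave are both synchronous, so while they run inside the async handler the bot cannot process other events. A possible way around this, sketched here under the assumption that you are on Python 3.9 or later and that the calls are safe to run from a worker thread, is to hand them to asyncio.to_thread. The run_blocking wrapper and the slow_answer stand-in are hypothetical names used only for illustration.

```python
import asyncio
import time

async def run_blocking(func, *args, **kwargs):
    """Run a synchronous function in a worker thread so the event loop stays free."""
    return await asyncio.to_thread(func, *args, **kwargs)

def slow_answer(question):
    # Stand-in for a blocking call such as teapot_ai.query or search_brave
    time.sleep(0.2)
    return f"answer to: {question}"

async def demo():
    # The two calls overlap because each one runs in its own thread
    return await asyncio.gather(
        run_blocking(slow_answer, "first"),
        run_blocking(slow_answer, "second"),
    )
```

Inside on_message, the same wrapper could be used as response = await run_blocking(teapot_ai.query, query=message.content, context=context).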

Deployment & Monitoring

TeapotLLM is small enough to run on almost any device, as it requires ~2GB of RAM and can run entirely on the CPU. Simply run your Discord bot code on a local laptop or hosted server. You should now be able to tag your chatbot in the server and watch it reply.

Example Test Cases

To ensure accuracy before deployment, it’s always good to select several test case questions to evaluate your model and RAG pipeline. We recommend picking at least 10 questions, preferably sourced from real user queries. Running a simple script like this allows us to validate that Teapot has the right data and configuration to effectively answer user questions.

teapot_ai.query("What is TeapotLLM?") # => "TeapotLLM is an open-source small language model."

teapot_ai.query("How many parameters is teapotllm") # => "TeapotLLM has 800 million parameters."

teapot_ai.query("What devices can it run on") # => "TeapotLLM can be hosted on low-power devices with as little as 2GB of CPU RAM such as a Raspberry Pi."

teapot_ai.query("Does Teapot AI support RAG pipelines?") # => "Yes"

teapot_ai.query("What model was teapotllm fine tuned off of?") # => "TeapotLLM was fine tuned off of flan-t5-large."

teapot_ai.query("How was Deepseek used?") # => "Deepseek was used to generate synthetic data for TeapotLLM."

teapot_ai.query("How many parameters does Deepseek have?") # => Hallucination resistance: "I'm sorry, but I don't have information on the number of parameters in Deepseek."
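Checks like these can be automated so they are easy to rerun whenever the documents or settings change. The sketch below uses a simple keyword match, which is a deliberately loose assumption about what counts as a correct answer; the run_test_cases helper is hypothetical, and the keywords are taken from the expected answers listed here.

```python
def run_test_cases(query_fn, cases):
    """Return (question, answer) pairs whose answer lacks the expected keyword."""
    failures = []
    for question, expected_keyword in cases:
        answer = query_fn(question)
        # Case-insensitive substring match against the model's answer
        if expected_keyword.lower() not in answer.lower():
            failures.append((question, answer))
    return failures

cases = [
    ("How many parameters is teapotllm", "800 million"),
    ("What model was teapotllm fine tuned off of?", "flan-t5-large"),
    ("How many parameters does Deepseek have?", "don't have information"),
]
```

Calling run_test_cases(teapot_ai.query, cases) returns an empty list when every answer contains its keyword; otherwise it returns the questions that need a closer look.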

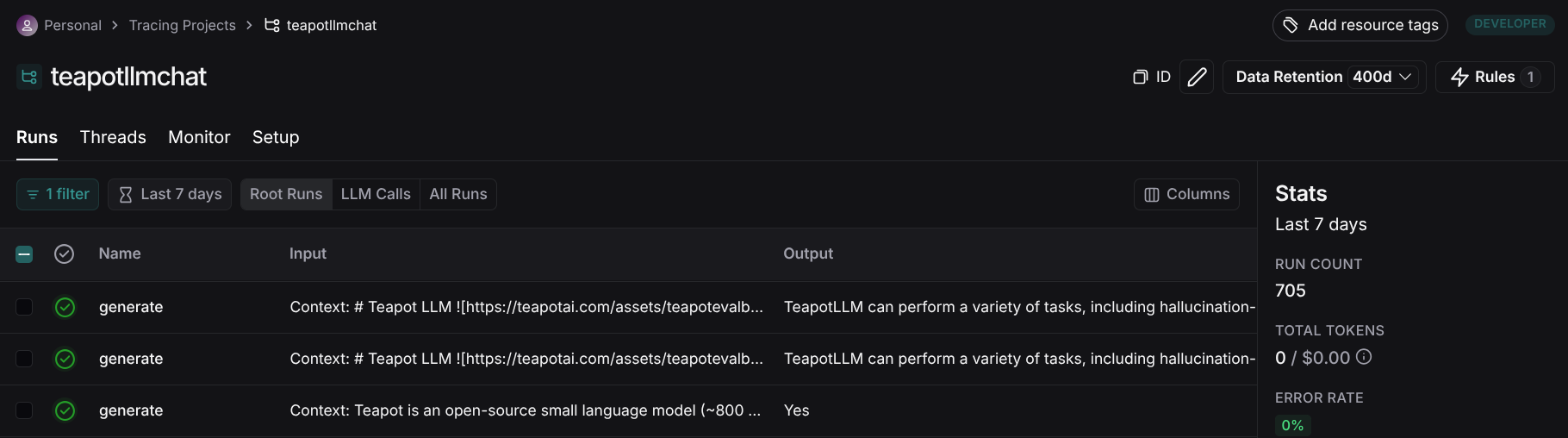

Monitoring Performance with LangSmith

Local test cases are a good start, but we also want to monitor performance once the chatbot is deployed to the real world. That is where LangSmith comes in. LangSmith is a tool that makes it easy to track traces from your application and evaluate their latency and performance, and you can even use the responses to further fine-tune or validate your models.

Teapot AI natively supports LangSmith in the library. Just add the following environment variables to automatically log any responses generated from your RAG pipeline.

LANGCHAIN_API_KEY=your_langsmith_api_key

LANGCHAIN_TRACING=true

LANGCHAIN_PROJECT=your_project_name

LANGCHAIN_ENDPOINT=https://api.smith.langchain.com

Conclusion

By leveraging TeapotLLM, Teapot AI’s RAG pipeline library, and Brave Search, we’ve built a Discord bot capable of answering FAQs efficiently while running entirely on your CPU. TeapotLLM’s low resource usage allows it to run on low-end devices like the Raspberry Pi, and with LangSmith, we can track performance and improve accuracy over time.

If you found this article interesting or want help with your project, we’d love to meet you in our Discord!